Imagine this: one day, your website is running smoothly, driving steady traffic, and then suddenly—poof! It’s gone from Google’s search results.

No warning, no clear explanation, just a sharp drop to zero visibility. Panic sets in. Your organic traffic vanishes, and you’re left wondering what went wrong.

If this sounds familiar, you’re not alone. Many site owners experience sudden deindexing due to reasons like hacking, spammy practices, or even simple configuration errors.

Unfortunately, Google rarely provides detailed guidance on how to fix this, leaving you to figure it out on your own.

But here’s the good news: getting your site reindexed is possible—and often faster than you think—if you take the right steps.

In this guide, we’ll walk you through a clear, actionable process to:

- Identify why your site was removed.

- Fix the underlying issues causing the deindexing.

- Request reindexing and regain your Google visibility.

- Prevent this from happening again in the future.

By the end of this article, you’ll have the roadmap to recover your website’s presence on Google and protect it from future penalties or removals. Let’s dive in!


Why Does Google Remove Sites from Its Index?

When your site is removed from Google’s index, it can feel like a mystery. However, Google typically deindexes sites for clear reasons tied to their Webmaster Guidelines. Understanding these reasons is the first step toward resolution.

1. Hacked Site

If your site is hacked and serving malicious content like phishing pages or malware, Google will deindex it to protect users. This is one of the most common reasons for sudden removal.

2. Spammy or Low-Quality Content

Google prioritizes quality and authority in search results. Your site risks removal for violating quality standards if it is flagged for:

- Thin, low-value content.

- Duplicate or scraped content.

- Auto-generated or keyword-stuffed pages.

3. Violation of Webmaster Guidelines

Using black-hat SEO tactics like cloaking, hidden text, or link schemes can result in penalties or outright removal from Google’s index.

4. Manual Actions

Google’s human reviewers might apply manual penalties for:

- Buying or selling backlinks.

- Hosting deceptive or harmful content.

- Failing to meet Google’s E-A-T standards (Expertise, Authority, Trustworthiness).

5. Accidental Deindexing

Sometimes, site owners unintentionally cause their own deindexing by:

- Adding a “noindex” tag to key pages.

- Blocking search engine crawlers in the robots.txt file.

- Misconfiguring server settings.

The Impact of Deindexing

Deindexing can devastate your traffic and rankings. Beyond losing visibility, your brand reputation can also take a hit, especially if the removal was due to a hack or spammy behavior.

The good news? Most of these issues are fixable. Once you identify the root cause, you can take action to get back on Google’s radar.

Step 1: Identify the Reason for Removal

Before you can fix the issue, you need to figure out why your site was removed. This investigative step is crucial for taking the right corrective actions. Here’s how you can diagnose the problem:

1.1 Check Google Search Console (GSC)

Google Search Console is your primary tool for identifying indexing issues. Follow these steps:

- Look for Manual Actions: Go to the Manual Actions section in GSC. If there’s a penalty, you’ll see a notification with details about what’s wrong (e.g., “Unnatural links to your site”).

- Review Security Issues: Visit the Security Issues section to check if your site has been flagged for hacking or malware.

- Analyze the Index Coverage Report: Navigate to Index > Coverage and review errors like:

  - Crawled - Currently Not Indexed

  - Blocked by robots.txt

  - Submitted URL Marked as Noindex

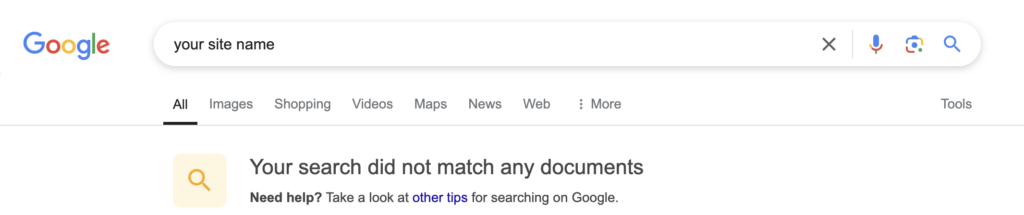

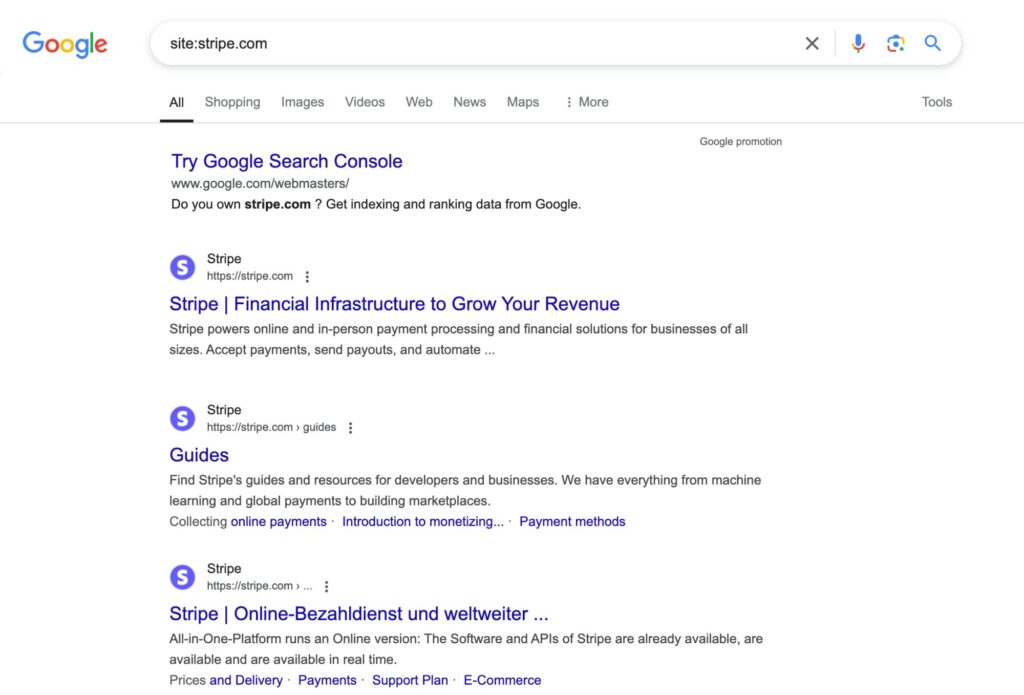

1.2 Use the Site Search Operator

Perform a Google search using the query: site:yourdomain.com

- No Results? This confirms that your site is deindexed.

- Partial Results? Only certain pages might be affected, which indicates a specific issue like a manual action or accidental noindexing.

1.3 Audit Recent Changes

Sometimes, site configurations or updates lead to unintended consequences. Check:

- robots.txt File: Ensure it isn’t blocking Googlebot. For example, the following rule blocks every crawler from the entire site:

    User-agent: *
    Disallow: /

- Meta Tags: Look for “noindex” tags on critical pages.

- CMS or Plugin Updates: Misconfigured SEO plugins (e.g., Yoast, Rank Math) can accidentally apply noindex directives or change site settings.
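The robots.txt check above can also be scripted. Here is a minimal sketch using Python's standard-library `urllib.robotparser`. The helper name `is_crawlable` and the `yourdomain.com` URLs are placeholders for illustration, and Python's parser does not replicate every detail of how Googlebot interprets rules (wildcards, for instance), so treat the result as a quick sanity check rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A rule set that blocks everything, and one that allows everything.
blocking_rules = "User-agent: *\nDisallow: /"
open_rules = "User-agent: *\nDisallow:"

print(is_crawlable(blocking_rules, "https://yourdomain.com/"))  # False
print(is_crawlable(open_rules, "https://yourdomain.com/"))      # True
```

Paste the contents of your live robots.txt into the first argument to test the URLs that matter most to you.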

1.4 Analyze Traffic Patterns

Use Google Analytics or similar tools to review traffic drops:

- Sudden Drop? Indicates a penalty or removal.

- Gradual Decline? Points to broader SEO issues or algorithmic changes.
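If you export daily sessions from your analytics tool, a few lines of Python can tell the two patterns apart. This is only a rough sketch: the function name `classify_traffic` and the thresholds (`cliff`, `decline`) are illustrative assumptions, not values taken from Google or any analytics product.

```python
from statistics import mean


def classify_traffic(daily_sessions, window=7, cliff=0.5, decline=0.8):
    """Label a series of daily sessions as a sudden drop, gradual decline, or stable.

    Compares the most recent `window` days with the `window` days before them.
    All thresholds are assumptions chosen for illustration.
    """
    if len(daily_sessions) < 2 * window:
        raise ValueError("need at least two full windows of data")

    earlier = mean(daily_sessions[-2 * window:-window])
    recent = mean(daily_sessions[-window:])
    if earlier == 0:
        return "no baseline"

    # Largest one-day fall inside the recent window.
    previous_days = daily_sessions[-window - 1:-1]
    current_days = daily_sessions[-window:]
    largest_fall = max(p - c for p, c in zip(previous_days, current_days))

    if recent < decline * earlier and largest_fall >= cliff * earlier:
        return "sudden drop"
    if recent < decline * earlier:
        return "gradual decline"
    return "stable"


print(classify_traffic([1000] * 10 + [20] * 4))       # sudden drop
print(classify_traffic(list(range(1000, 300, -50))))  # gradual decline
```

A "sudden drop" result is a cue to check Manual Actions and Security Issues first, while a "gradual decline" points toward a broader content or technical audit.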

Once you’ve identified the root cause, you’re ready to move to the next step: fixing the problem.

Step 2: Fix the Underlying Issue

Now that you’ve identified the reason for your site’s removal from Google, it’s time to fix the problem. Addressing the root cause effectively is crucial for reindexing success.

2.1 Resolve Security Issues

If your site has been hacked or flagged for malware:

- Scan for Malware: Use tools like Sucuri or Wordfence to detect malicious code or files.

- Remove Malicious Content: Delete infected files or code from your server. Update all software, plugins, and themes.

- Secure Your Site:

  - Install an SSL certificate (switch to HTTPS).

  - Change all admin passwords and implement two-factor authentication.

  - Use a Web Application Firewall (WAF) for ongoing protection.

- Request a Review: In Google Search Console, go to Security Issues and request a security review after resolving the issue.

2.2 Address Manual Penalties

If Google applied a manual action:

- Unnatural Links:

  - Identify spammy backlinks using tools like Ahrefs or Google’s Link Report in GSC.

  - Use Google’s Disavow Tool to disassociate your site from harmful backlinks.

- Thin Content:

  - Add value to low-quality pages by expanding them with original, in-depth content.

  - Remove or merge duplicate or irrelevant pages.

- Keyword Stuffing or Cloaking: Remove any instances of manipulative practices, such as hidden text, cloaking, or over-optimized content.

- Submit a Reconsideration Request: Explain the steps you’ve taken to resolve the issue in the Manual Actions section of GSC.
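For the unnatural links case, the Disavow Tool expects a plain text file with one entry per line: either a full URL or a whole domain written with the `domain:` prefix, with lines starting with `#` treated as comments. The sketch below assembles such a file from lists you have compiled yourself. The helper name `build_disavow_file` and the example domains are hypothetical.

```python
def build_disavow_file(domains, urls, note=""):
    """Return disavow-file text: an optional comment, domain entries, then single URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # Whole domains use the "domain:" prefix; duplicates are dropped.
    lines.extend(f"domain:{domain}" for domain in sorted(set(domains)))
    # Individual pages are listed as full URLs.
    lines.extend(sorted(set(urls)))
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    domains=["spam-directory.example", "link-farm.example"],
    urls=["https://blog.example/paid-post.html"],
    note="Links we could not get removed by outreach",
)
print(text)
```

Save the output as a .txt file and upload it through the Disavow Tool. Disavow whole domains sparingly, since doing so discards every link from that site.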


2.3 Fix Accidental Deindexing

Sometimes, deindexing is caused by unintentional errors. Common fixes include:

- Check and Correct

robots.txt:

Ensure that the file allows search engine crawling. Replace:

User-agent: *

Disallow: /With:

User-agent: *

Disallow:- Remove “Noindex” Tags:

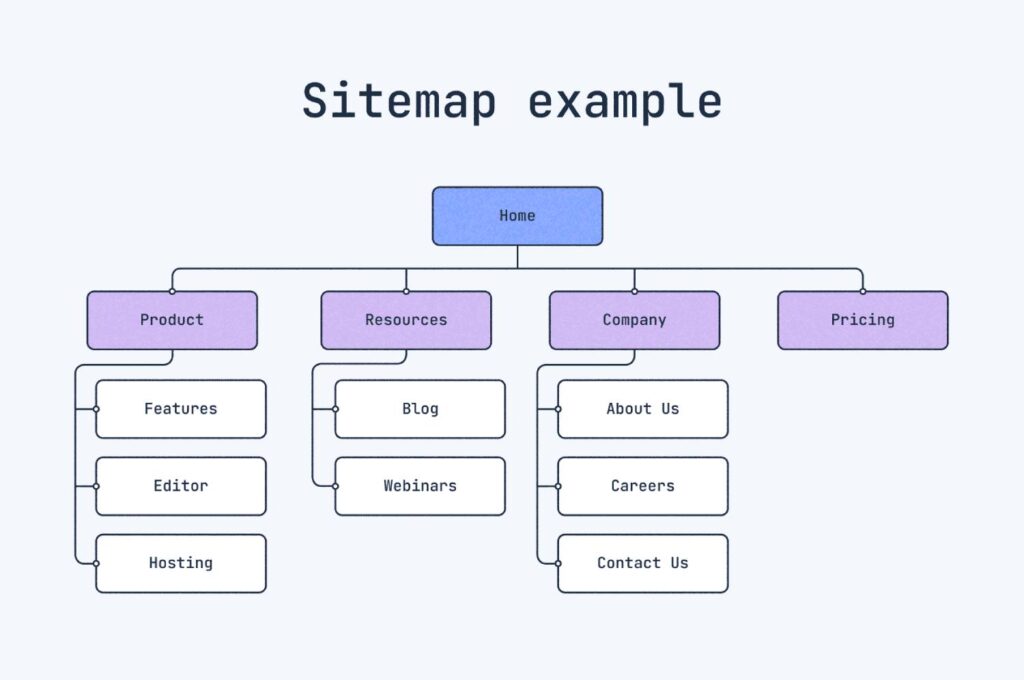

Inspect key pages for<meta name="robots" content="noindex">and remove this tag. - Update Your Sitemap:

Ensure your XML sitemap includes all important pages and submit it to GSC.
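Checking pages for a stray noindex tag by hand gets tedious on larger sites. Below is a small sketch that scans a page's HTML for robots meta directives using Python's built-in `html.parser`. The class and function names are made up for this example, and it only looks at meta tags, so a noindex sent through an HTTP header would not be caught here.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives += [part.strip().lower() for part in content.split(",")]


def has_noindex(html: str) -> bool:
    """Return True if the HTML carries a noindex (or equivalent 'none') directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in finder.directives or "none" in finder.directives


print(has_noindex('<head><meta name="robots" content="noindex"></head>'))  # True
print(has_noindex('<head><meta name="robots" content="index, follow"></head>'))  # False
```

Feed it the saved source of your key pages (home page, category pages, top posts) after every plugin or theme update.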

2.4 Improve On-Site SEO

Even if your site isn’t penalized, Google’s algorithms may deprioritize it if certain best practices are ignored:

- Fix Broken Links: Use tools like Screaming Frog or Ahrefs to identify 404 errors and redirect them appropriately.

- Optimize Content Quality:

  - Ensure your content aligns with Google’s E-A-T Guidelines (Expertise, Authority, Trustworthiness).

  - Create original, in-depth, and user-focused content.

- Enhance Site Speed: Use tools like Google PageSpeed Insights to identify and fix performance bottlenecks.

- Ensure Mobile-Friendliness: Test your site with Google’s Mobile-Friendly Test tool and fix any issues.

Once you’ve fixed the identified issues, it’s time to let Google know your site is ready for reindexing.

Step 3: Request Reindexing via Google Search Console

After addressing the underlying issues, the next step is to ask Google to reevaluate and reindex your site. This process involves testing your fixes and submitting requests through Google Search Console (GSC).

3.1 Test Key Pages with the URL Inspection Tool

Before submitting your site for reindexing, ensure individual pages are error-free:

- Go to the URL Inspection Tool: In GSC, paste the URL of the page you’ve fixed into the URL Inspection Tool.

- Check for Errors:

  - Review the status for warnings like “URL Blocked by robots.txt” or “Crawled - Currently Not Indexed”.

  - If errors persist, revisit Step 2 to resolve them.

- Live URL Testing: Use the Test Live URL feature to confirm that Google can now crawl and index the page.

3.2 Submit a Reindexing Request

Once a page passes the live test:

- Click the Request Indexing button in the URL Inspection Tool.

- Google will queue your request for review and recrawling.

For multiple pages, repeat the process for each URL.

3.3 Reconsideration Requests for Manual Penalties

If your site was penalized with a manual action, follow these steps:

- Navigate to Manual Actions in GSC:

Open the Manual Actions report to review flagged issues. - Submit a Reconsideration Request:

- Write a detailed explanation of the fixes you’ve implemented.

- Provide evidence (e.g., before-and-after screenshots, reports of removed spam links, or security updates).

- Be professional and concise. Avoid blaming Google or providing excuses.

- Wait for Google’s Response:

Manual reviews can take a few weeks. Keep an eye on GSC for updates.

3.4 Resubmit Your Sitemap

For site-wide issues, ensure Google crawls your entire site:

- Go to the Sitemaps section in GSC.

- Enter your XML sitemap URL (e.g., yourdomain.com/sitemap.xml).

- Submit the sitemap for re-crawling.
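Before you resubmit, it helps to confirm the sitemap actually lists the pages you care about. The sketch below parses sitemap XML with Python's standard library and reports any important URLs that are missing. The function names and sample URLs are assumptions for illustration, and it handles a plain URL sitemap only, not a sitemap index file.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the set of <loc> URLs found in a URL sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def missing_from_sitemap(xml_text, important_urls):
    """Return the important URLs that the sitemap does not list, sorted."""
    return sorted(set(important_urls) - sitemap_urls(xml_text))


sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://yourdomain.com/</loc></url>"
    "<url><loc>https://yourdomain.com/blog/</loc></url>"
    "</urlset>"
)
print(missing_from_sitemap(sample, ["https://yourdomain.com/", "https://yourdomain.com/pricing/"]))
# ['https://yourdomain.com/pricing/']
```

Any URL it prints should be added to the sitemap (or checked for an accidental exclusion in your SEO plugin) before you submit.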

3.5 Monitor the Process

Reindexing doesn’t happen instantly. During this phase:

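- Track GSC Notifications: Google sends a message in GSC when a reconsideration request or security review has been processed. For ordinary pages there is no reindexing alert, so recheck them with the URL Inspection Tool or the Coverage report.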

- Analyze Search Traffic: Use Google Analytics or GSC to monitor traffic recovery.

- Watch for Crawling Activity: Check server logs to confirm Googlebot is crawling your site again.
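To watch for crawling activity without reading raw logs line by line, you can count Googlebot requests per day. The sketch below assumes your server writes the common "combined" access log format used by default in Apache and Nginx. The function name is hypothetical, and because user agent strings can be spoofed, the counts are an indication rather than proof that the visits came from Google.

```python
import re
from collections import Counter

# Matches the date, request, status, and user agent of a combined-format log line.
LOG_LINE = re.compile(
    r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]+\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_per_day(log_lines):
    """Count requests whose user agent mentions Googlebot, grouped by day."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("day")] += 1
    return dict(hits)


sample_log = [
    '66.249.66.1 - - [10/Jan/2025:06:25:14 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2025:06:26:02 +0000] "GET / HTTP/1.1" 200 9120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(googlebot_hits_per_day(sample_log))  # {'10/Jan/2025': 1}
```

A count that rises from zero in the days after you request indexing is a good sign that Google is recrawling the site.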

By following these steps, you signal to Google that your site is ready for reindexing.

Step 4: Optimize Your Site for Future Safety

Recovering your site is one thing, but keeping it in Google’s good graces is another. To avoid future deindexing issues, follow these best practices to maintain a healthy and Google-friendly site.

4.1 Monitor Regularly

Consistent monitoring ensures you catch issues before they escalate.

- Google Search Console Alerts:

- Enable email notifications for manual actions, indexing errors, and security issues.

- Use Site Monitoring Tools:

- Tools like Pingdom, UptimeRobot, or Site24x7 alert you to downtime or unusual site behavior.

- Set Up SEO Audits:

- Perform monthly audits using tools like Ahrefs, SEMrush, or Screaming Frog to detect technical or content-related problems.

4.2 Strengthen Security

A hacked site is one of the fastest ways to get removed from Google’s index. Protect your site with robust security measures:

- Install SSL (HTTPS): Secure your site with an SSL certificate to encrypt data and improve user trust.

- Enable a Web Application Firewall (WAF): Tools like Cloudflare or Sucuri can block malicious traffic and attacks.

- Update Software Regularly: Keep your CMS, plugins, and themes up-to-date to patch vulnerabilities.

- Perform Regular Backups: Use services like BackupBuddy or UpdraftPlus to ensure you can restore your site quickly after an attack.

4.3 Follow Google’s Webmaster Guidelines

Align your site with Google’s standards to avoid penalties:

- Content Quality:

- Publish original, high-value content that meets user intent.

- Update old posts to maintain relevance and accuracy.

- Link Practices:

- Avoid buying or selling links.

- Focus on building a natural backlink profile through quality content and outreach.

- Avoid Spammy Tactics:

- Say no to keyword stuffing, cloaking, or hidden text.

- Don’t use doorway pages or other deceptive practices.

4.4 Improve User Experience (UX)

Google prioritizes user-friendly sites in its rankings. Optimize yours by:

- Speeding Up Your Site: Use tools like Google PageSpeed Insights or GTmetrix to identify and fix slow-loading pages.

- Ensuring Mobile-Friendliness: Test your site with Google’s Mobile-Friendly Test and make adjustments if necessary.

- Enhancing Navigation: Use clear menus, breadcrumbs, and internal linking to improve site structure and usability.

4.5 Create a Crisis Plan

Prepare for potential issues to minimize downtime:

- Maintain a Security Checklist: Have a step-by-step guide ready to address hacking or malware.

- Document Site Configurations: Keep a record of your robots.txt, sitemap structure, and CMS settings for quick troubleshooting.

- Partner with Professionals: Work with a trusted SEO agency, like Derivate X, for ongoing technical audits and strategy support.

Key Takeaway

Google’s algorithms and guidelines evolve constantly, but by focusing on quality content, technical health, and robust security, you can protect your site from future penalties and enjoy consistent visibility in search results.

tl;dr

- Why It Happens: Google deindexes sites due to issues like hacking, spammy content, guideline violations, manual penalties, or accidental misconfigurations.

- Step 1: Identify the Problem: Use Google Search Console to check for manual actions, security issues, or indexing errors. Review robots.txt, meta tags, and recent changes.

- Step 2: Fix the Issues:

- Clean up hacked content and improve site security.

- Address manual penalties like spammy links or thin content.

- Correct misconfigurations like “noindex” tags or blocked crawling.

- Step 3: Request Reindexing:

- Use the URL Inspection Tool in GSC to test and submit pages for indexing.

- For manual penalties, submit a Reconsideration Request explaining your fixes.

- Step 4: Prevent Future Issues: Monitor your site regularly, strengthen security, follow Google’s guidelines, and optimize for user experience.

- Outcome: Recovery can take days to weeks depending on the issue, but with systematic fixes, your site can regain visibility and rankings.

Conclusion

Getting deindexed by Google can feel like a major setback, but with the right approach, it’s possible to recover and even strengthen your site’s performance. By identifying the root cause, addressing the issues, and proactively optimizing your site for long-term health, you can regain your search engine visibility and safeguard your digital presence.

The key is to act quickly and systematically:

- Diagnose the problem using Google Search Console.

- Fix underlying issues, whether they’re security-related, technical, or content-focused.

- Request reindexing and monitor the process.

- Optimize for the future to prevent deindexing from happening again.

If you’re facing challenges with penalty recovery or technical SEO, professional help can make all the difference. Reach out to Derivate X for expert guidance and long-term SEO solutions.

Frequently Asked Questions (FAQs)

How long does it take to get reindexed?

It depends on the severity of the issue and Google’s review timeline. Simple fixes (like accidental noindexing) may take a few days, while manual penalties can take weeks to resolve.

Will my rankings recover immediately after reindexing?

Not necessarily. Reindexed pages may take time to regain their previous rankings, especially if penalties impacted your site’s trust and authority. Focus on quality content and consistent optimization to rebuild rankings.

Can I contact Google directly for support?

Google doesn’t offer direct support for deindexing issues. The best way to communicate with Google is through Search Console and the Webmasters Help Community.

What happens if my reconsideration request is denied?

If your request is denied, carefully review Google’s feedback. It likely means some issues were not fully resolved. Make additional fixes and resubmit the request.

Should I start a new website if my current site is deindexed?

Starting over is a last resort. It’s often better to fix the issues with your current site, as starting fresh means losing all your existing backlinks, authority, and rankings.

What tools can help monitor site health?

Tools like Google Search Console, Ahrefs, SEMrush, and Pingdom can help you stay on top of indexing, security, and SEO performance.

Is it possible for only some pages to be deindexed?

Yes. Partial deindexing can occur due to issues like duplicate content, thin content, or specific pages being marked with noindex tags.

How do I avoid deindexing during a website redesign?

Before launching a redesign, test your changes in a staging environment. Double-check your robots.txt, meta tags, and sitemap to ensure they’re correctly configured.

Can a single bad backlink cause deindexing?

Unlikely. However, a pattern of spammy or unnatural backlinks can lead to penalties. Use the Disavow Tool in GSC to handle bad links.

What role does E-A-T play in indexing?

E-A-T (Expertise, Authority, Trustworthiness) is critical for maintaining strong rankings. Low E-A-T can lead to reduced visibility or penalties. Build E-A-T with high-quality content, credible backlinks, and a professional site presence.
