TL;DR (Too Long; Didn’t Read)

- LLMs.txt is like robots.txt but for AI models – It helps websites control how AI scrapers like OpenAI, Perplexity, and Google AI access their content.

- Why it matters – AI search engines are using content without attribution, reducing website traffic. LLMs.txt gives you control over AI-driven search results.

- How to use it – Create an llms.txt file, define which AI bots to allow or block, and upload it to your website’s root directory.

- Limitations – LLMs.txt is voluntary, meaning AI models may ignore it. It doesn’t remove content already scraped, and it’s not a foolproof solution for content protection.

- SEO impact – Blocking AI bots can prevent content theft, but it won’t stop AI-generated summaries from affecting search traffic.

- Future-proofing – SEO strategies must evolve beyond blocking AI bots. Brand visibility, authoritative content, and AI-friendly optimizations will be key in an AI-first search world.

Imagine spending years building a content strategy, crafting high-value blogs, and dominating organic search—only to see AI models scrape your work and regurgitate it without attribution.

Frustrating, right?

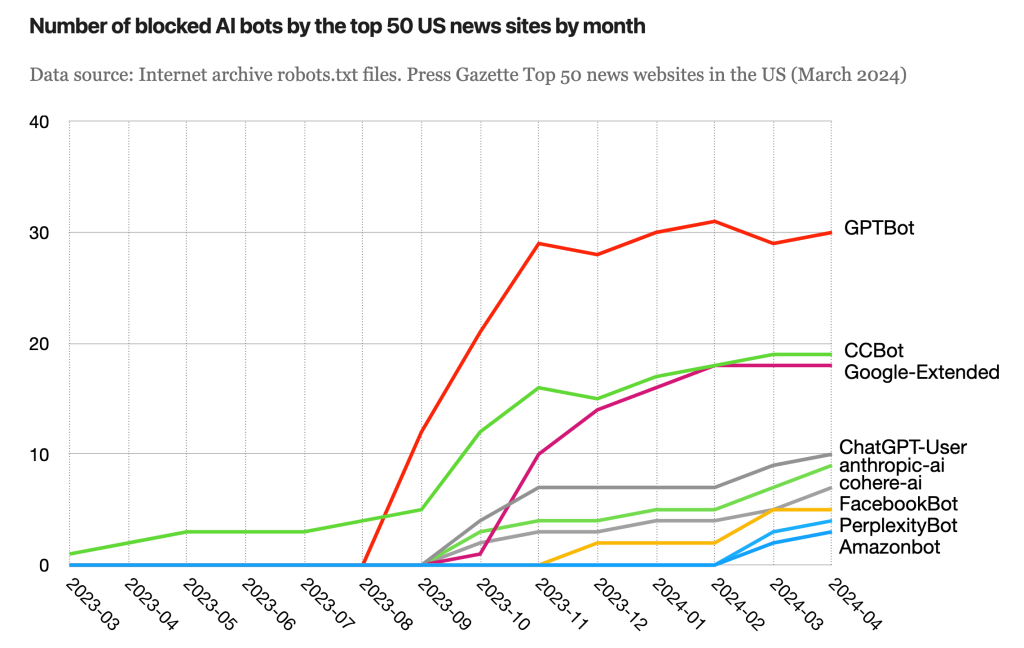

That’s exactly what’s happening today. Large Language Models (LLMs) like OpenAI’s ChatGPT, Google’s AI Overviews, and Perplexity AI are crawling the web, training on your content, and serving AI-generated answers—often without driving traffic back to your site.

Enter LLMs.txt, the latest tool in an SEO’s arsenal. It’s a simple text file that lets website owners state whether AI models may access and use their content. Think of it as robots.txt for AI, but instead of telling Googlebot which pages to crawl, it tells AI scrapers whether they can touch your content at all.

But why does this matter for SEO?

Because AI-driven search is rewriting the rules of organic visibility. With Google and other platforms shifting towards AI-generated answers, websites risk losing traffic if AI scrapes their content without attribution. LLMs.txt gives you a say in this evolving landscape—helping you protect your content, control AI access, and safeguard your SEO efforts.

In this guide, we’ll break down what LLMs.txt is, how it works, and whether you should implement it on your site. Let’s dive in.

What Is LLMs.txt?

LLMs.txt is a newly introduced text file that lets website owners control how AI models interact with their content. Just as robots.txt tells search engine crawlers which pages they may crawl, LLMs.txt provides rules specifically for Large Language Models (LLMs) like OpenAI’s GPT, Google’s AI Overviews, and Perplexity AI.

Why Was LLMs.txt Introduced?

The rise of AI-powered search engines has sparked a major concern:

- AI models scrape and train on website content without explicit permission.

- AI-generated answers in search results reduce organic traffic to the original source.

- Website owners lose control over how their content is used, leading to issues of content ownership and visibility.

In response to these challenges, LLMs.txt was introduced as a voluntary mechanism to give website owners a say in whether AI models can access, train on, or display their content in AI-generated search results.

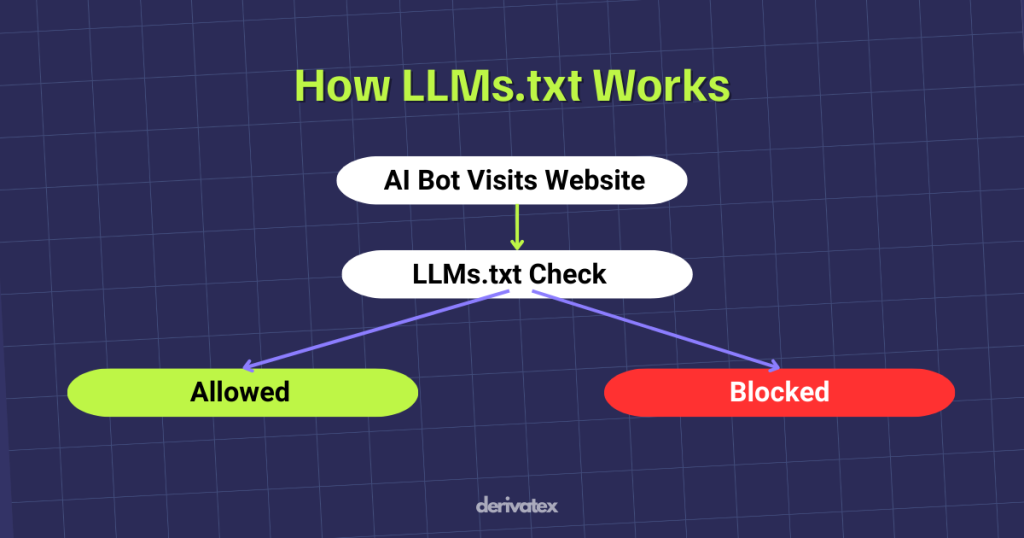

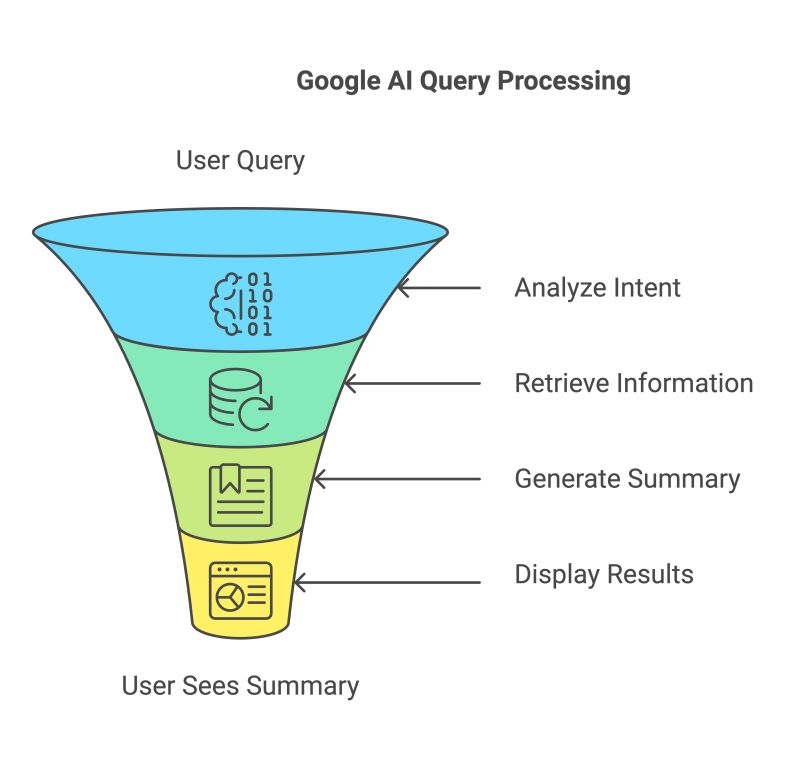

How Does LLMs.txt Work?

At its core, LLMs.txt functions similarly to robots.txt. Website owners can add an llms.txt file to their site’s root directory with specific rules that:

✅ Allow or disallow AI bots from scraping content.

✅ Specify permissions for individual AI models (e.g., block OpenAI but allow Google’s Gemini).

✅ Prevent AI-generated search results from replacing organic links.

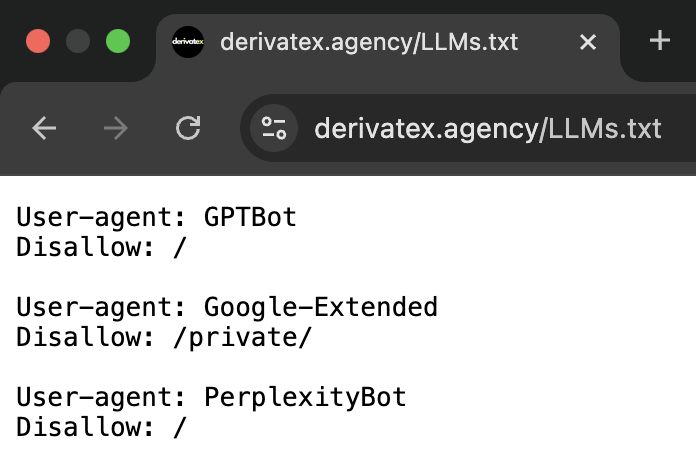

A basic LLMs.txt file might look like this:

User-agent: OpenAI

Disallow: /

User-agent: Google-Extended

Allow: /

This means:

- OpenAI’s crawlers are blocked from accessing the website.

- Google’s AI models (Google-Extended) are allowed to use the content.
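To make the file format concrete, here is a minimal Python sketch that reads the example above into a dictionary of rules per bot. It assumes LLMs.txt uses the same group syntax as robots.txt, as this guide describes; the function name `parse_llms_txt` is hypothetical and not part of any official tool.

```python
def parse_llms_txt(text: str) -> dict[str, list[tuple[str, str]]]:
    """Parse robots.txt-style groups into {user_agent: [(directive, path), ...]}."""
    rules: dict[str, list[tuple[str, str]]] = {}
    current_agents: list[str] = []
    last_was_rule = False

    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            if last_was_rule:
                current_agents = []  # a rule line closed the previous group
            current_agents.append(value)
            rules.setdefault(value, [])
            last_was_rule = False
        elif field in ("allow", "disallow"):
            for agent in current_agents:
                rules[agent].append((field, value))
            last_was_rule = True

    return rules


# The basic example from this guide.
EXAMPLE = """\
User-agent: OpenAI
Disallow: /

User-agent: Google-Extended
Allow: /
"""

if __name__ == "__main__":
    for agent, agent_rules in parse_llms_txt(EXAMPLE).items():
        print(agent, agent_rules)
```

Parsing the file yourself is a quick way to catch typos (a misspelled directive is silently skipped here, so a bot you meant to block would show up with an empty rule list).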

Here is a list of AI crawlers that respect (or ignore) LLMs.txt:

| AI Model | Follows LLMs.txt? | Notes |
|---|---|---|
| Google-Extended | ✅ Yes | Controls AI search indexing |
| OpenAI | ❌ No | No official enforcement |
| Perplexity AI | ⚠️ Partial | Mixed reports from users |

With AI search becoming more dominant, LLMs.txt gives website owners the power to decide whether AI can leverage their content or not.

Why Does LLMs.txt Matter for SEO?

As AI-powered search evolves, LLMs.txt isn’t just a technical update—it’s a strategic move for website owners looking to protect their SEO investments. Here’s why it matters:

1. Protects Your Content from AI Scraping

AI models scrape web content to improve their responses, but they don’t always credit the original source.

This means:

- Your content fuels AI-generated answers, but users never visit your site.

- AI platforms train on your content for free, using your hard work to enhance their models.

By implementing LLMs.txt, you can control which AI models have access to your content, preventing unauthorized data extraction.

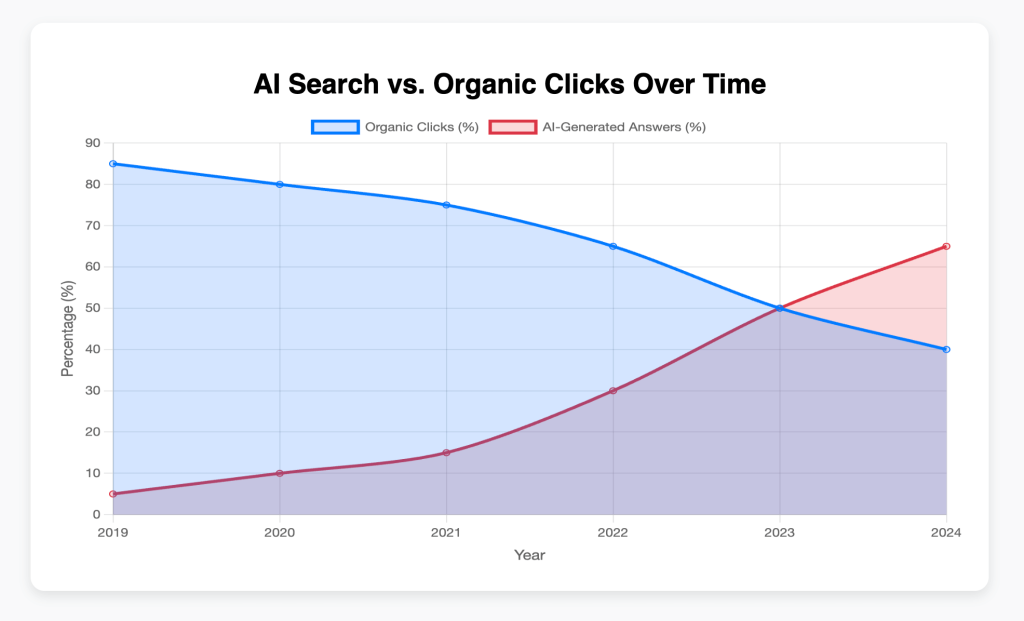

2. Helps Maintain Organic Traffic & SEO Visibility

AI-generated search results are designed to provide instant answers, reducing the need to click on search results.

- If Google’s AI Overviews directly answer a query using your content, you might lose valuable traffic.

- If AI models summarize your blog posts without a link, your website loses brand visibility and authority.

Blocking AI bots through LLMs.txt can help keep your content exclusive to your site, at least for bots that honor the file, so users have more reason to visit your pages instead of consuming AI-generated responses.

3. Gives You Control Over AI Attribution

Not all AI-generated content is bad—some platforms cite sources and drive traffic back.

- Google’s AI Overviews might link back to original sources in some cases.

- AI-powered search engines like Perplexity may quote and credit website content.

LLMs.txt lets you selectively allow AI models that align with your SEO goals while blocking those that don’t.

4. Forces AI Companies to Respect Publisher Rights

Right now, AI models scrape websites without asking for permission.

- Publishers have little practical control over how AI companies use their data.

- AI platforms profit from your content, but you get nothing in return.

LLMs.txt is the first step toward establishing clear boundaries for AI-driven search. As AI evolves, search engines and content creators will need to define ethical AI scraping practices—and LLMs.txt is leading that conversation.

How to Use LLMs.txt (Step-by-Step Guide)

Implementing LLMs.txt is straightforward, and setting it up correctly gives compliant AI models clear content boundaries to follow. Use this step-by-step guide to set up and optimize your LLMs.txt file.

Step 1: Create an LLMs.txt File

Like robots.txt, LLMs.txt is a plain text file. To create one:

- Open a text editor (Notepad, VS Code, etc.).

- Save the file as llms.txt.

- Place it in your website’s root directory (e.g., yourwebsite.com/llms.txt).

Step 2: Define AI Crawlers & Permissions

Inside your llms.txt file, you’ll define which AI models can access your content and which cannot. Here’s a breakdown:

✅ Allowing an AI bot access to all content:

User-agent: Google-Extended

Allow: /

This permits Google’s AI models to use your content for AI-generated search results.

❌ Blocking an AI bot from your site:

User-agent: OpenAI

Disallow: /

This prevents OpenAI (ChatGPT, GPT-4, etc.) from scraping your website.

Customizing access for different bots:

User-agent: Perplexity

Disallow: /private-content/

User-agent: OpenAI

Disallow: /

- Perplexity AI is blocked from accessing /private-content/ but can crawl the rest of the site.

- OpenAI is blocked completely.
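If you want to sanity-check what a set of rules means for a given URL, the sketch below evaluates a path against per-bot rules. This guide does not spell out how LLMs.txt rules are matched, so the code borrows the robots.txt convention (RFC 9309) as an assumption: the longest matching path prefix wins, Allow wins a tie, and a path with no matching rule is allowed. The `RULES` dictionary and the `is_allowed` helper are hypothetical names for illustration.

```python
# Rules from the "customizing access" example, already split per bot.
RULES = {
    "Perplexity": [("disallow", "/private-content/")],
    "OpenAI": [("disallow", "/")],
}


def is_allowed(rules: dict, agent: str, path: str) -> bool:
    """Return True if `agent` may fetch `path` under longest-prefix matching."""
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for directive, prefix in rules.get(agent, []):
        if not prefix or not path.startswith(prefix):
            continue  # an empty value restricts nothing
        more_specific = len(prefix) > best_len
        tie_prefers_allow = len(prefix) == best_len and directive == "allow"
        if more_specific or tie_prefers_allow:
            best_len, allowed = len(prefix), directive == "allow"
    return allowed


if __name__ == "__main__":
    for agent, path in [
        ("Perplexity", "/private-content/report.html"),
        ("Perplexity", "/blog/post"),
        ("OpenAI", "/blog/post"),
    ]:
        print(agent, path, is_allowed(RULES, agent, path))
```

Bot names are compared exactly here; a real crawler may match its name case-insensitively, so treat this as a way to reason about your rules rather than a prediction of bot behavior.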

Step 3: Use a Complete List of AI Crawlers

To ensure your content is protected, consider blocking or allowing AI bots individually. Here are some commonly known AI crawlers you might want to include:

| AI Company | Crawler Name |
|---|---|
| OpenAI | OpenAI |
| Google AI | Google-Extended |
| Perplexity | Perplexity |
| Anthropic (Claude AI) | Anthropic-llm |
| Common Crawl (used by many AI models) | CCBot |
| Microsoft AI | BingAI |

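If an AI crawler doesn’t respect llms.txt, treat it as a red flag for unethical scraping. Also confirm each crawler name against the vendor’s own documentation before relying on it: OpenAI, for instance, documents its crawler’s user-agent token as GPTBot, and a rule written for a name the bot doesn’t announce will never match.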
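Maintaining one block per crawler by hand gets tedious, so you may prefer to generate the file from a single policy table. The sketch below is one way to do that; the crawler names come from the table in this guide, while the allow/block choices in `CRAWLER_POLICY` and the `build_llms_txt` function are hypothetical examples, not recommendations.

```python
# Hypothetical policy: which crawlers to allow and which to block site-wide.
CRAWLER_POLICY = {
    "OpenAI": "block",
    "Google-Extended": "allow",
    "Perplexity": "block",
    "Anthropic-llm": "block",
    "CCBot": "block",
    "BingAI": "allow",
}


def build_llms_txt(policy: dict[str, str]) -> str:
    """Render one User-agent group per crawler, separated by blank lines."""
    groups = []
    for agent, decision in policy.items():
        if decision not in ("allow", "block"):
            raise ValueError(f"unknown decision for {agent}: {decision!r}")
        directive = "Allow" if decision == "allow" else "Disallow"
        groups.append(f"User-agent: {agent}\n{directive}: /")
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    # Print the file; redirect the output to llms.txt to save it.
    print(build_llms_txt(CRAWLER_POLICY), end="")
```

Keeping the policy in one place makes it easier to review changes and to add a new crawler when one appears in your logs.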

Step 4: Upload LLMs.txt to Your Website

Once your file is ready:

- Access your website’s root directory (via FTP, cPanel, or hosting dashboard).

- Upload llms.txt to the main directory (where robots.txt is).

- Verify it’s live by visiting yourwebsite.com/llms.txt in your browser.
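The browser check above can also be scripted, which is handy if you manage several sites. This is a minimal sketch using only Python’s standard library; `llms_txt_url` and `is_live` are hypothetical helper names, and `yourwebsite.com` is the placeholder domain used throughout this guide.

```python
import urllib.request


def llms_txt_url(site: str) -> str:
    """Build the llms.txt URL for a site root, defaulting to HTTPS."""
    site = site.strip().rstrip("/")
    if not site.startswith(("http://", "https://")):
        site = "https://" + site
    return site + "/llms.txt"


def is_live(site: str, timeout: float = 10.0) -> bool:
    """Return True if the file is served with HTTP 200, False on any error."""
    try:
        with urllib.request.urlopen(llms_txt_url(site), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # covers URLError, HTTPError, and timeouts
        return False


if __name__ == "__main__":
    site = "yourwebsite.com"  # replace with your own domain
    print(llms_txt_url(site), "live" if is_live(site) else "not reachable")
```

A 200 response only confirms the file is published at the root; it says nothing about whether any bot reads or obeys it.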

Step 5: Monitor & Test AI Compliance

Some AI companies may not respect LLMs.txt fully. To check:

- Google Search Console → Review traffic changes from AI-generated results.

- Server Logs → Identify AI crawlers ignoring your LLMs.txt rules.

- Perplexity & AI Search Engines → Check if your content still appears in AI-generated answers.
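For the server-log check, a short script can flag requests from bots you asked to stay away. The sketch below assumes your server writes the common "combined" access log format and that each bot announces a recognizable name in its user-agent string; the `BLOCKED` mapping, the `find_violations` function, and the sample log lines (including the user-agent strings) are all hypothetical.

```python
import re

# Matches the request, status, size, referer, and user-agent fields of a
# combined-format access log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Bot name fragment -> path prefix you disallowed for it in llms.txt.
BLOCKED = {"OpenAI": "/", "Perplexity": "/private-content/"}

# Fetching the policy files themselves is expected, not a violation.
POLICY_FILES = {"/llms.txt", "/robots.txt"}


def find_violations(log_lines, blocked=BLOCKED):
    """Return (bot, path) pairs where a blocked bot requested a disallowed path."""
    hits = []
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        path, ua = match.group("path"), match.group("ua").lower()
        if path in POLICY_FILES:
            continue
        for token, prefix in blocked.items():
            if token.lower() in ua and path.startswith(prefix):
                hits.append((token, path))
    return hits


SAMPLE = [
    '203.0.113.5 - - [10/Mar/2025:10:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; OpenAI/1.0)"',
    '203.0.113.6 - - [10/Mar/2025:10:00:01 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Perplexity/1.0)"',
    '203.0.113.6 - - [10/Mar/2025:10:00:02 +0000] "GET /private-content/a.pdf HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; Perplexity/1.0)"',
    '203.0.113.7 - - [10/Mar/2025:10:00:03 +0000] "GET /llms.txt HTTP/1.1" 200 120 "-" "Mozilla/5.0 (compatible; OpenAI/1.0)"',
]

if __name__ == "__main__":
    for bot, path in find_violations(SAMPLE):
        print(f"{bot} requested disallowed path {path}")
```

User-agent strings are easy to spoof, so a clean report does not prove nobody is scraping; it only shows which self-identified bots ignored your rules.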

What If AI Companies Ignore LLMs.txt?

Since LLMs.txt is not legally enforced, some AI models might still scrape content. If that happens:

- Use paywalls or login requirements for sensitive content.

- Add copyright disclaimers within content.

- Monitor AI-generated search results and report unauthorized use.

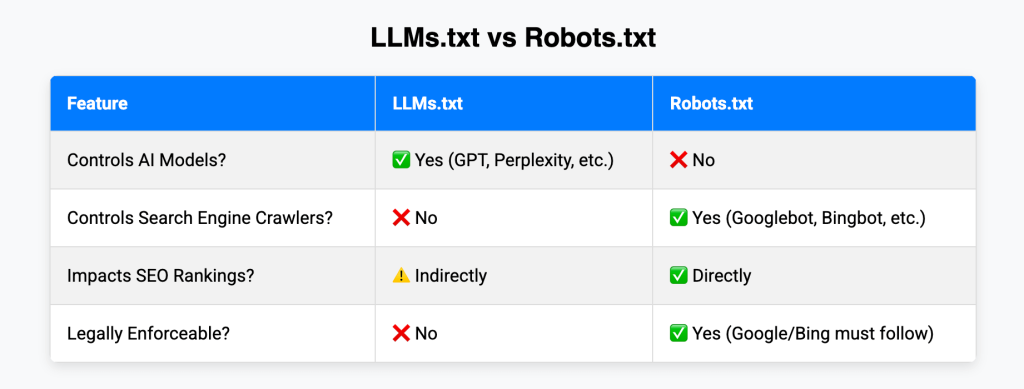

LLMs.txt vs Robots.txt: What’s the Difference?

At first glance, LLMs.txt might seem like just another version of robots.txt, but they serve different purposes in the evolving digital landscape. Let’s break it down:

Similarities Between LLMs.txt & Robots.txt

Both files:

✅ Help control bot access – They tell specific crawlers which parts of a site they can or can’t access.

✅ Are placed in the root directory – The format and location (yourwebsite.com/llms.txt or yourwebsite.com/robots.txt) are similar.

✅ Use “User-agent” rules – Both files allow or disallow certain bots from crawling content.


Key Differences Between LLMs.txt & Robots.txt

| Feature | Robots.txt | LLMs.txt |
|---|---|---|
| Purpose | Controls search engine crawlers (Googlebot, Bingbot, etc.) | Controls AI models (ChatGPT, Perplexity, Claude, etc.) |
| Who It Affects | Search engines ranking your content in SERPs | AI bots training on or using your content |
| Impact on SEO | Directly affects indexing and rankings in Google & Bing | Prevents AI-generated results from replacing organic traffic |
| Enforceability | Mostly respected by major search engines | Voluntary compliance by AI companies (not legally binding) |
| Effect on Content | Prevents search engines from indexing/discovering certain pages | Blocks AI bots from scraping or training on your content |

Should You Use Both LLMs.txt and Robots.txt?

YES. They serve different functions, and using both ensures maximum control:

- Use robots.txt to guide search engines on what to crawl for rankings.

- Use llms.txt to control AI access and protect content from AI scrapers.

For example, you might block OpenAI in LLMs.txt to keep ChatGPT from using your content while allowing Googlebot in robots.txt so your content still ranks in search results.

Best practice: Implement both files strategically to ensure that your content ranks in search engines but isn’t exploited by AI models.

What If AI Bots Ignore LLMs.txt?

Unlike robots.txt, which Google and Bing reliably follow, LLMs.txt has no such track record, and AI companies might ignore it.

- Google has committed to respecting Google-Extended rules.

- OpenAI allows opting out but doesn’t guarantee compliance.

- Perplexity and other AI search engines have mixed records.

Bottom Line: LLMs.txt is a step toward content protection, but it’s not a silver bullet. Combine it with stronger SEO, copyright notices, and monitoring to fully safeguard your content.

Limitations & Challenges of LLMs.txt

While LLMs.txt is a promising step toward controlling AI content usage, it has limitations that website owners must be aware of.

1. AI Companies Can Ignore LLMs.txt

robots.txt is voluntary too, but major search engines have honored it for decades and it is now formalized in RFC 9309. LLMs.txt has no comparable standard or track record behind it.

- Some AI models may choose to ignore it and scrape content anyway.

- There is no legal enforcement requiring AI companies to comply.

- AI startups and rogue scrapers might bypass LLMs.txt entirely.

Solution: Monitor AI-generated search results to check if your content is being used without permission.

2. LLMs.txt Doesn’t Remove Content Already Scraped

Blocking AI bots won’t delete content that has already been used for training.

- If an AI model scraped your content before you implemented LLMs.txt, it can still generate responses based on it.

- OpenAI, Google, and other AI providers don’t offer a way to retroactively remove data their models were already trained on.

Solution: If you suspect your content has been scraped, you may need to file DMCA takedown requests or explore legal action.

3. No Universal Standard Yet

Unlike robots.txt, whose rules are documented in a published standard (RFC 9309), LLMs.txt is new and inconsistent across AI platforms.

- Each AI company defines its own policies on how (or if) they respect LLMs.txt.

- Some AI models don’t have official documentation on whether they comply with LLMs.txt.

- The industry lacks a standard enforcement framework, making adoption unpredictable.

Solution: Keep an eye on AI policies. Google-Extended, OpenAI, and Perplexity have provided guidelines, but others are still unclear.

4. LLMs.txt Doesn’t Prevent AI Summary Hijacking

Even if AI bots don’t scrape your site, AI-powered search results may summarize your content without direct scraping.

- Google’s AI Overviews and Perplexity’s AI search generate summaries from multiple sources.

- Even if you block an AI bot, your content could still influence AI-generated search results indirectly.

Solution: Strengthen your brand authority and ensure AI models reference you properly through citation strategies.

5. LLMs.txt Is Not a Long-Term SEO Fix

AI-driven search is changing how people find information.

- SEO is shifting from blue links to AI-generated answers.

- Even if AI bots respect LLMs.txt, user behavior is changing—many users may prefer AI-generated summaries over clicking links.

Solution: Future-proof your content by focusing on brand visibility, entity SEO, and unique value propositions that AI cannot easily replicate.

The Reality Check: Is LLMs.txt Worth Using?

✅ Yes, if you want to limit how AI models scrape and use your content.

✅ Yes, if you care about controlling AI access while still ranking in search.

❌ No, if you expect it to be a bulletproof content protection tool—it’s not enforceable across all AI models.

LLMs.txt is a piece of the puzzle, but not the entire solution. SEO strategies must adapt beyond just blocking AI bots to remain competitive in an AI-driven search landscape.


Final Thoughts: The Future of AI & SEO

AI search is here to stay. Website owners must embrace the shift, ensuring their content is either:

- Protected from AI misuse (via LLMs.txt & legal measures)

- Optimized for AI search visibility (through citations, branding, and expertise)

SEO is no longer just about ranking in Google—it’s about controlling how AI presents your content.

FAQs on LLMs.txt & AI SEO

- What is LLMs.txt, and why was it created?

LLMs.txt is a text file that allows website owners to control how AI models access their content. It was introduced as a response to AI scrapers training on web content without permission, reducing organic traffic to original websites.

- How does LLMs.txt impact SEO?

LLMs.txt asks AI bots not to use your content for AI-generated search results, which can help you maintain organic traffic where bots comply. However, AI-generated summaries can still affect click-through rates, so it’s not a complete fix.

- How do I set up an LLMs.txt file?

1. Create a new text file called llms.txt.

2. Add rules to allow or block AI bots (e.g., User-agent: OpenAI followed by Disallow: /).

3. Upload it to your website’s root directory (yourwebsite.com/llms.txt).

4. Monitor AI compliance to see if bots respect your settings.

- Can AI models ignore LLMs.txt?

Yes. LLMs.txt is not legally binding, so AI companies can choose to ignore it. While Google and OpenAI have some level of compliance, other AI models may not follow the rules.

- Should I block AI bots using LLMs.txt?

It depends. If you want to protect your content from being scraped, blocking AI bots is a good move. However, if your goal is to be visible in AI-driven search results, allowing certain AI models might be beneficial.

- Does blocking AI bots prevent AI-generated answers?

Not entirely. Even if AI bots can’t scrape your content directly, they may still generate answers using secondary sources that reference your content.

- Will LLMs.txt become legally enforceable in the future?

Possibly. As AI-generated search grows, publishers may demand stronger legal protections against unauthorized scraping. Governments and tech companies could introduce industry standards or laws around AI content usage.